Speed and scalability are critical to delivering a good user experience in modern software. Distributed caching is a powerful technique for achieving both.

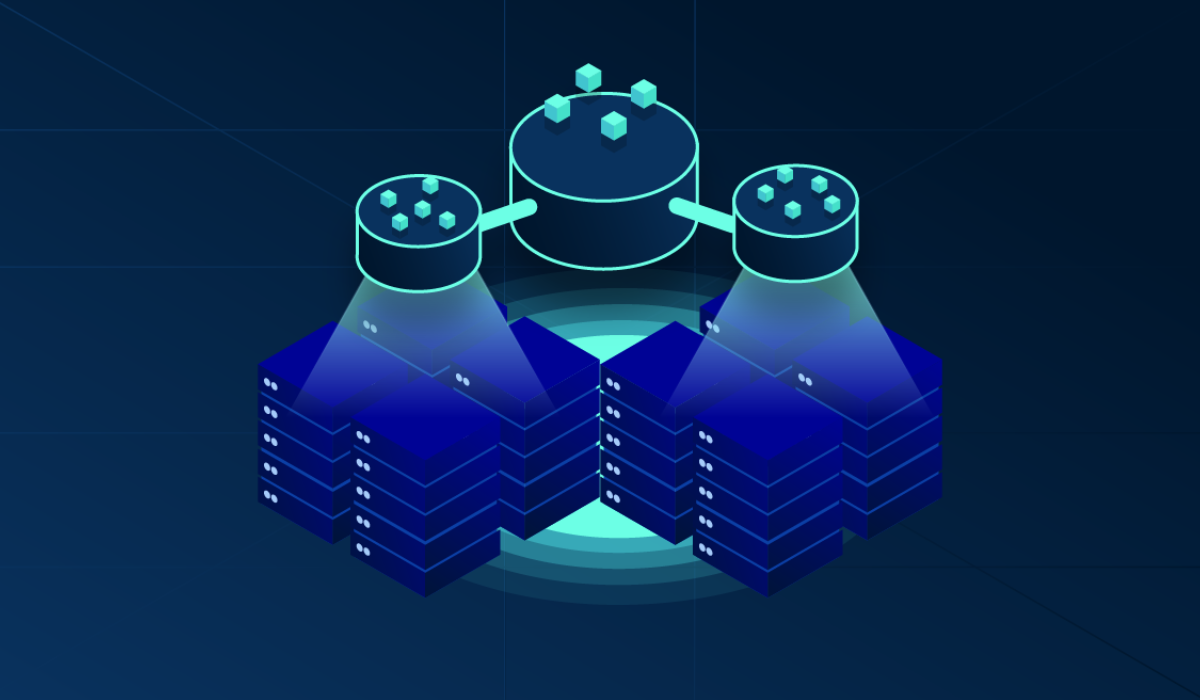

Caching is the practice of storing frequently accessed data in a high-speed data store to reduce the time and resources required to fetch it from a slower source, such as a database or an external API. Distributed caching takes this concept a step further by distributing cached data across multiple nodes or servers, enabling better performance, scalability, and fault tolerance for applications.

Distributed caching systems work by storing frequently used data in memory, making it readily available to the application without the need to perform time-consuming data retrieval operations from the original data source.
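
To make the idea of spreading cached data across nodes more concrete, here is a minimal sketch of consistent hashing, one common way (though not the only one) for a client to decide which node owns a given key. The `HashRing` class, the node names, and the `replicas` parameter are illustrative assumptions, not part of any particular caching product.

```python
import bisect
import hashlib


class HashRing:
    """Toy consistent-hash ring that maps cache keys to node names."""

    def __init__(self, nodes, replicas=100):
        self.replicas = replicas  # virtual points per node, smooths the distribution
        self._ring = []           # sorted list of (hash, node) pairs
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value):
        # md5 is used only for its even spread, not for security
        return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)

    def add_node(self, node):
        for i in range(self.replicas):
            bisect.insort(self._ring, (self._hash(f"{node}#{i}"), node))

    def remove_node(self, node):
        self._ring = [(h, n) for h, n in self._ring if n != node]

    def node_for(self, key):
        if not self._ring:
            raise ValueError("ring has no nodes")
        # First point clockwise from the key's hash, wrapping around at the end
        idx = bisect.bisect_left(self._ring, (self._hash(key), ""))
        return self._ring[idx % len(self._ring)][1]
```

With this scheme, removing a node only remaps the keys that lived on it; every other key keeps its owner, which is why approaches like this are popular when cache clusters grow or shrink.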

Pros of Distributed Caching

- Improved Performance : By reducing the need to repeatedly fetch data from slower storage systems, distributed caching significantly improves application performance. Response times decrease, resulting in a more responsive user experience.

- Scalability : Distributed caching systems can easily scale horizontally by adding more cache nodes, allowing applications to handle increased loads without a proportional increase in latency.

- Fault Tolerance : Many distributed caching systems are designed with redundancy in mind, typically by replicating data across nodes. If one cache node fails, others can continue serving data, which keeps disruption to the application minimal.

- Reduced Load on Data Sources : Caching reduces the load on backend data sources, such as databases or APIs, which can help prevent overloading these systems during traffic spikes.

Use Cases of Distributed Caching

- Database Caching : Caching database query results can significantly reduce database load and improve application responsiveness.

- Content Delivery : In content delivery networks (CDNs), distributed caching is used to store and serve static assets like images, videos, and web pages closer to the end user, reducing latency.

- Session Management : Storing user sessions in a distributed cache lets any application server handle any request, because session data such as authentication state is shared rather than tied to a single server.

- Real-Time Data : In real-time applications, such as stock trading platforms, distributed caching ensures quick access to current data, reducing delays.

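- API Rate Limiting : Distributed caching can be used to enforce rate limits on APIs by keeping per-client request counters in a shared cache, so that every application instance sees the same counts. This helps prevent abuse and ensures fair usage.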
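
As an illustration of the rate-limiting use case, the sketch below implements a simple fixed-window counter. It is only a sketch under stated assumptions: a plain dictionary stands in for the shared cache, and the `FixedWindowRateLimiter` class and its parameters are hypothetical names. With a real shared cache the counter update would usually be an atomic increment on a key that expires (in Redis, for example, the `INCR` and `EXPIRE` commands).

```python
import time


class FixedWindowRateLimiter:
    """Allows at most `limit` requests per client in each `window_seconds` window."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock       # injectable so the behaviour can be tested
        self._counters = {}      # stand-in for the shared cache: key -> count

    def allow(self, client_id):
        # All requests in the same time window share one counter key
        window = int(self.clock() // self.window_seconds)
        key = f"rate:{client_id}:{window}"
        # In a shared cache this would be an atomic increment with an expiry;
        # this in-memory version never cleans up old windows
        count = self._counters.get(key, 0) + 1
        self._counters[key] = count
        return count <= self.limit
```

A caller would check `limiter.allow(client_id)` before handling each request and reject the request when it returns `False`. Fixed windows are easy to reason about but allow short bursts at window boundaries, so this is a starting point rather than a complete solution.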

Strategies for Distributed Caching

Different strategies exist for implementing distributed caching in applications, depending on specific use cases and requirements.

- Cache-Aside (Lazy Loading) : In this strategy, the application code is responsible for loading data into the cache when needed. If the data is not in the cache, it is fetched from the data source and then stored in the cache for future use.

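- Write-Through : Every write goes to both the cache and the underlying data source as part of the same operation, which keeps the cache consistent with the data source at the cost of slower writes. A related but distinct variant, write-behind (also called write-back), updates the cache first and writes to the data source asynchronously; this speeds up writes but risks losing data if a node fails before the pending writes are flushed.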

- Read-Through : With read-through caching, the cache itself (rather than the application code) loads data from the data source on a miss, so the application only ever talks to the cache.

- Eager Loading (Cache Warming) : With eager loading, the application preloads data into the cache, anticipating future requests. This can be useful when access patterns are highly predictable.

- Cache Invalidation : Cache invalidation involves removing or updating cached data when it becomes stale or obsolete. Techniques such as time-based expiration or event-driven invalidation can be used.
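
The following sketch shows how a cache-aside read, a write-through write, and time-based expiration might fit together. It is a minimal illustration, not a production design: `TTLCache` is an in-memory stand-in for a cache node, a plain dictionary plays the role of the data source, and all function and key names are hypothetical.

```python
import time


class TTLCache:
    """In-memory stand-in for a cache node, with time-based expiration."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # stale entry: treat it as a miss
            return None
        return value

    def set(self, key, value):
        self._entries[key] = (value, self.clock() + self.ttl_seconds)

    def delete(self, key):
        # Explicit (event-driven) invalidation of a single key
        self._entries.pop(key, None)


def get_user_cache_aside(cache, db, user_id):
    """Cache-aside read: check the cache first, then fall back to the source."""
    key = f"user:{user_id}"
    user = cache.get(key)
    if user is None:
        user = db[user_id]     # slow path: read from the data source
        cache.set(key, user)   # populate the cache for later reads
    return user


def save_user_write_through(cache, db, user_id, user):
    """Write-through: update the data source and the cache in one operation."""
    db[user_id] = user
    cache.set(f"user:{user_id}", user)
```

Note the trade-off this exposes: if the data source changes without going through `save_user_write_through`, cache-aside readers keep seeing the old value until the entry expires or is deleted.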

Popular Distributed Caching Tools

Several distributed caching tools and platforms are widely used in the industry.

- Redis : Redis is an in-memory data store that supports various data structures and is often used as a distributed cache due to its speed and versatility.

- Memcached : Memcached is another popular in-memory key-value store, known for its simplicity and speed. It’s often used for caching web content and session data.

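- Apache Kafka : Kafka is a distributed streaming platform rather than a cache in its own right. In caching architectures it is typically used alongside a cache, for example to carry real-time data or change events that keep cached data up to date, which makes it relevant only to specific caching scenarios.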

- Hazelcast : Hazelcast is an open-source in-memory data grid that provides distributed caching capabilities and is designed for high availability and scalability.

Best Practices for Distributed Caching

To maximize the benefits of distributed caching, consider the following best practices.

- Cache Size Management: Monitor and manage the cache size to prevent excessive memory usage.

- Cache Eviction Policies: Implement appropriate cache eviction policies, such as least recently used (LRU) or least frequently used (LFU), to remove less-used data from the cache.

- Data Serialization: Ensure proper data serialization to store complex data types in the cache.

- Monitoring and Logging: Use monitoring and logging tools to track cache performance and diagnose issues.

- Versioning and Tagging: Implement versioning and tagging for cached data to ease cache invalidation.

- Cache Backups: Consider backups or replication for cache data to prevent data loss.
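
To illustrate size management, eviction, and basic monitoring together, here is a small sketch of a bounded cache with a least-recently-used (LRU) eviction policy and hit/miss counters. LRU is just one possible policy, and the `LRUCache` class and its method names are assumptions made for this example; real caching tools provide their own configurable policies.

```python
from collections import OrderedDict


class LRUCache:
    """Bounded cache that evicts the least recently used entry when full."""

    def __init__(self, max_entries):
        if max_entries < 1:
            raise ValueError("max_entries must be at least 1")
        self.max_entries = max_entries
        self._entries = OrderedDict()  # oldest entry first, newest last
        self.hits = 0
        self.misses = 0

    def get(self, key, default=None):
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        return default

    def set(self, key, value):
        self._entries[key] = value
        self._entries.move_to_end(key)
        if len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # drop the least recently used entry

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

Tracking the hit rate over time is a simple way to judge whether the cache size and eviction policy suit the workload: a persistently low hit rate suggests the cache is too small or is caching the wrong data.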

Challenges and Considerations

While distributed caching offers numerous benefits, it also comes with challenges and considerations.

- Cache Consistency: Maintaining data consistency between the cache and the data source can be complex.

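- Cache Invalidation: Deciding when and how to invalidate cached data so that it remains up to date is difficult, since invalidating too rarely serves stale data and invalidating too often erases the benefit of caching.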

- Cache Warming: Pre-populating the cache can be resource-intensive and may require careful planning.

- Data Eviction: Selecting the right cache eviction policy means balancing memory usage against cache hit rates.

- Distributed Systems: A distributed cache inherits the complexities of distributed systems, such as network partitions and node failures.
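
The invalidation challenge has no single answer, but one approach that ties in with the versioning practice mentioned earlier is to embed a version number in every key, so that a whole group of entries can be invalidated by bumping the version. The sketch below assumes a dict-like object as the cache; `VersionedNamespace` and its key layout are hypothetical and shown only to illustrate the idea.

```python
class VersionedNamespace:
    """Invalidates a group of keys at once by bumping a namespace version."""

    def __init__(self, cache, namespace):
        self.cache = cache          # any dict-like store stands in for the cache
        self.namespace = namespace
        self._version_key = f"{namespace}:version"

    def _version(self):
        # The current version lives in the cache itself, starting at 1
        return self.cache.setdefault(self._version_key, 1)

    def _full_key(self, key):
        return f"{self.namespace}:v{self._version()}:{key}"

    def get(self, key):
        return self.cache.get(self._full_key(key))

    def set(self, key, value):
        self.cache[self._full_key(key)] = value

    def invalidate_all(self):
        # Old entries become unreachable; a real cache would later
        # evict or expire them to reclaim memory
        self.cache[self._version_key] = self._version() + 1
```

The cost of this design is an extra lookup for the version on each access, and stale entries linger until eviction removes them, so it works best combined with a time-based expiration or a bounded cache size.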

Distributed caching is a vital component in modern software architecture, enabling applications to achieve enhanced performance, scalability, and fault tolerance. By implementing caching strategies and choosing appropriate tools, developers can harness the power of distributed caching to create faster and more responsive applications, ultimately delivering a better user experience. However, it’s essential to understand the challenges and considerations involved to make informed decisions and optimize caching systems effectively.

Stay tuned for future articles where we delve deeper into different distributed cache implementation techniques, providing insights into advanced strategies and best practices to further optimize your applications.